Many moons ago, I posted a surprisingly popular answer on StackOverflow regarding how to write to CSV files using PostgreSQL. The answer, in a nutshell, is the COPY statement. But there’s a catch – PostgreSQL imposes strict security limitations on the use of this statement, which are best dealt with using another feature, the SECURITY DEFINER option to CREATE FUNCTION. Here, I will attempt to explain in more detail what these security restrictions are, and how to do what you want without simply disabling that security.

CSV made easy: the COPY statement

There is no standard mechanism in SQL for reading or writing CSV (or similar) files, but most DBMSes provide at least one approach. In the case of PostgreSQL, this comes in the form of the very flexible COPY FROM and COPY TO. The relevant manual page sums these up pretty neatly:

COPYmoves data between PostgreSQL tables and standard file-system files.

COPY TOcopies the contents of a table to a file, whileCOPY FROMcopies data from a file to a table (appending the data to whatever is in the table already).

COPY TOcan also copy the results of aSELECTquery.

There are, basically, two places a file could be which PostgreSQL is expected to read or write: on the server (i.e. where PostgreSQL itself is running) or on the client (i.e. where your SQL is originating, such as a webserver running a PHP script).

If your file is on the server, then the “full” version of COPY is available to you: you can write something like COPY (SELECT * FROM public.foo) TO '/tmp/foo.csv' WITH (FORMAT CSV); or COPY public.foo FROM '/tmp/foo.csv' WITH (FORMAT CSV);.

But there’s a snag: you can only use this form if connected to PostgreSQL as the “superuser” (usually called “root”). Why? Because PostgreSQL has no way of knowing which PostgreSQL “roles” should map to which users or permissions on the underlying OS; the process running the DBMS itself may well have access to read and write all sorts of files which you really wouldn’t want someone to get their hands on. In a worst case scenario, an attacker who got hold of an SQL account with COPY privileges might be able to create a back-door to take complete control of your server, and PostgreSQL would rather not be responsible for that!

Side-stepping the problem: accessing files on the client

If your file is on the client, then the PostgreSQL server can’t just go onto that remote machine and grab the file – like uploading a file to a website, you need to provide the data in some way. Since as far as it’s concerned, you’re not actually reading a file, just throwing it data, PostgreSQL can apply the same security policy it would if you just wrote a bunch of INSERT statements, and doesn’t need the superuser-only restriction.

This is why there are a couple of special cases in the COPY command: COPY FROM STDIN and COPY TO STDOUT. The downside is that it’s up to you to push that data in (or catch it coming out); for instance, graphical tools like PgAdmin III or phpPgAdmin generally have an “Execute query to file” option, which internally sets up a COPY TO STDOUT command and saves the result to disk.

The command-line psql interface, meanwhile, has a special \copy command, which you can run as though it were an ordinary COPY statement, but with the file handled by the client, not the server. This could be built in to a shell script, and not needing to copy data to/from the server might be an advantage, but building it into a more complex data import/export system could be tricky.

How to be root: SECURITY DEFINER functions

Obviously, if it were really only possible to use COPY when connected as superuser, it wouldn’t be very useful – you certainly don’t want all your scripts to be regularly connecting as “root” and leaving the door open for attackers to compromise that account. Luckily, there is another feature we can use to our advantage, which is described thus in the manual page for CREATE FUNCTION:

SECURITY INVOKERindicates that the function is to be executed with the privileges of the user that calls it. That is the default.

SECURITY DEFINERspecifies that the function is to be executed with the privileges of the user that created it.

This brief explanation belies the power of this option: you can create a function such that, whatever user actually runs it, PostgreSQL acts as though it were run as a different user – specifically, the “owner” of the function.

Hopefully it is obvious how this applies to the topic in hand – define a SECURITY DEFINER function while logged in as “root”, and it will be able to use the full COPY command, even when your application is logged in as a properly locked-down user. At its simplest, it could look something like this:

CREATE FUNCTION export_foo() RETURNS VOID SECURITY DEFINER LANGUAGE SQL AS $BODY$ COPY (SELECT * FROM public.foo) TO '/tmp/foo.csv' WITH ( FORMAT CSV ); $BODY$;

Obviously, this is a rather awkward function – it exists only to add the SECURITY DEFINER attribute to one line of some larger piece of application logic. You could, though, expand this with procedural code (using PL/pgSQL, or one of the other pluggable languages) to process the whole task – for instance, define a Temporary Table, fill it from an uploaded CSV, sanity-check the data, and insert it into a permanenent table.

The dangers of over-generalising

Rather than expand the function for a specific purpose, you might be tempted to simply generalise it into one which wraps up the basic options of COPY.

Here’s a simple example of how not to write a general-purpose import function:

CREATE FUNCTION copy_from_ignoring_all_security(table_name text, file_path text)

RETURNS VOID

LANGUAGE plpgsql

SECURITY DEFINER

AS $BODY$

BEGIN

-- This is a really bad idea. Do not copy this function.

EXECUTE '

COPY

' || quote_ident(table_name) || '

FROM

' || quote_literal(file_path) || '

WITH (

FORMAT CSV, HEADER

);

';

-- This is a really bad idea. Do not copy this function.

END;

$BODY$;

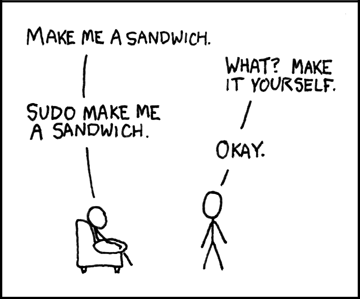

This is a really bad idea! The security restriction on COPY is there for a good reason, so simply over-riding it as though you know better is clearly not the way to go. Indeed, you could generalise this further, and write a function which took a string of SQL, and executed it as a superuser – thus giving any user which could execute that function all the rights of a superuser. This is actually quite a common mistake; for instance, some Linux systems configure the sudo command so that users can run any command as root at will.

Image courtesy of xkcd (Randall Munroe), used under a Creative Commons Attribution-NonCommercial 2.5 License. Also available as a T-shirt.

This is no more a correct configuration of sudo than our hypothetical PostgreSQL function is a correct use of SECURITY DEFINER, since it effectively makes users who can sudo into superusers. (It does afford some protection, since the user will generally be prompted for their password, like in Windows UAC; but on a multi-user system, it makes any weak user password into a complete system risk).

Doing it right: letting root say no

Rather than “running code as root”, perhaps a better way of phrasing what tools such as sudo and SECURITY DEFINER should be used for is “asking root to run code for you”. The crucial difference being that it is perfectly acceptable – indeed, essential – for root to “say no”, rather than blindly carrying out the requested action.

In the case of COPY, it is up to your function to constrain what users can do – the tighter the restrictions the better – while allowing for all the scenarios your application needs. There are actually two things you need to define:

- Which files should the user be allowed to read/write on disk? This might be a particular directory, for instance, and the filename might have to have a suitable prefix or extension.

- Which tables should the user be able to read/write in the database? This would normally be defined by

GRANTs in the database, but the function is now running as “root”, so tables which would normally be “out of bounds” will be fully accessible. You probably don’t want to let someone invoke your function and add rows on the end of your “users” table…

You might want to just hard-code as much as possible, but remember to guard against awkward things like /../ and the dreaded null byte. If in doubt, a tight whitelist always trumps an incomplete blacklist. When you find something that doesn’t meet your requirements, you want to abort, and make sure your application knows you’ve aborted, so you will probably want to make use of RAISE EXCEPTION or something similar.

Finally, a couple of extra things to be careful of:

- You’ll be building dynamic SQL in your function, so need to make sure you’re guarded against SQL Injection. Make appropriate use of the

quote_identandquote_literalfunctions, which will do appropriate double- and single-quoting for you. - Think about which connecting users need to be able to import files at all, and

GRANTonly those users theEXECUTEpermission on your function. That will probably take the form of a “group role” which can then beGRANTed to actual “login roles”, but that’s a whole nother topic.

Putting it into practice: an annotated example

OK, this is the tl;dr bit of the post, I guess: I give you some code that you can take away and customise. I take absolutely no responsibility for the security of this function, or your modified version of it. I’ve explained the principles involved, and welcome feedback of anything I might have missed. :)

Export from database to file

CREATE FUNCTION export_temp_table_to_csv_file(table_name text, file_name text)

RETURNS VOID

LANGUAGE plpgsql

-- The magic ingredient: Anyone who can execute this can do so with superuser privileges,

-- as long as the function was created while logged in as a superuser.

SECURITY DEFINER

AS $BODY$

DECLARE

-- These must be as restrictive as possible, for security reasons

-- Hard-coded directory to which all CSV files will be exported

file_path text := '/var/my_application/csv_output/';

-- File names must contain only alphanumerics, dashes and underscores,

-- and all must end in the extension .csv

file_name_regex text := E'^[a-zA-Z0-9_-]+\\.csv$';

-- Only allow exports of tables whose names begin 'temp_export_',

-- indicating that they are Temporary Tables created for the purpose

table_name_regex text := '^temp_export_[a-z_]+$';

BEGIN

-- Sanity check input

IF

table_name !~ table_name_regex

THEN

RAISE EXCEPTION 'Invalid temp table name (% doesn''t match %)', table_name, table_name_regex;

END IF;

IF

file_name !~ file_name_regex

THEN

RAISE EXCEPTION 'Invalid data file name (% doesn''t match %)', file_name, file_name_regex;

END IF;

-- OK? Go!

-- Make sure there's zero chance of SQL injection here

EXECUTE '

COPY

' || quote_ident(table_name) || '

TO

' || quote_literal(file_path || file_name) || '

WITH (

FORMAT CSV, HEADER

);

';

END;

$BODY$;

-- Don't let just anyone do this privileged thing

REVOKE ALL ON FUNCTION export_temp_table_to_csv_file( table_name text, file_name text )

FROM PUBLIC;

GRANT EXECUTE ON FUNCTION export_temp_table_to_csv_file( table_name text, file_name text )

TO group_csv_exporters;

Now, your application will need to:

- open a normal, non-superuser, connection to the database

- create an appropriately named temp table, with the relevant column names and types

- insert whatever data it needs to export into the temp table

- run the function, e.g.

SELECT export_temp_table_to_csv_file('temp_export_foo', 'foo.csv'); - do whatever needs to be done with the file this creates on the DB server

Import from file to database

CREATE FUNCTION import_csv_file_to_temp_table(table_name text, file_name text)

RETURNS VOID

LANGUAGE plpgsql

-- The magic ingredient: Anyone who can execute this can do so with superuser privileges,

-- as long as the function was created while logged in as a superuser.

SECURITY DEFINER

AS $BODY$

DECLARE

-- These must be as restrictive as possible, for security reasons

-- Hard-coded directory in which all CSV files to import will be placed

file_path text := '/var/my_application/csv_input/';

-- File names must contain only alphanumerics, dashes and underscores,

-- and all must end in the extension .csv

file_name_regex text := E'^[a-zA-Z0-9_-]+\\.csv$';

-- Only allow imports to tables whose names begin 'temp_csv_',

-- indicating that they are Temporary Tables created for the purpose

table_name_regex text := '^temp_import_[a-z_]+$';

BEGIN

-- Sanity check input

IF

table_name !~ table_name_regex

THEN

RAISE EXCEPTION 'Invalid temp table name (% doesn''t match %)', table_name, table_name_regex;

END IF;

IF

file_name !~ file_name_regex

THEN

RAISE EXCEPTION 'Invalid data file name (% doesn''t match %)', file_name, file_name_regex;

END IF;

-- OK? Go!

-- Make sure there's zero chance of SQL injection here

EXECUTE '

COPY

' || quote_ident(table_name) || '

FROM

' || quote_literal(file_path || file_name) || '

WITH (

FORMAT CSV, HEADER

);

';

END;

$BODY$;

-- Don't let just anyone do this privileged thing

REVOKE ALL ON FUNCTION import_csv_file_to_temp_table( table_name text, file_name text )

FROM PUBLIC;

GRANT EXECUTE ON FUNCTION import_csv_file_to_temp_table( table_name text, file_name text )

TO group_csv_importers;

Now, your application will need to:

- prepare a CSV file in the appropriate directory on the database server

- open a normal, non-superuser, connection

- create an appropriately named temp table, with the relevant column names and types

- run the function, e.g.

SELECT import_csv_file_to_temp_table('temp_import_foo', 'foo.csv'); - do whatever it needs to do with the data in the temp table

I think there are a couple more holes to plug…

SECURITY DEFINER functions still respect the caller’s search path. So I could write my own function called public.quote_ident(text), change my search_path to ‘public,pg_catalog’, and have my code executed as superuser. Qualifying every function and operator call will fix this, though it’s a little awkward (each “||” becomes “OPERATOR(pg_catalog.||)”). Alternatively, you can add “SET search_path TO pg_catalog,pg_temp[,…]” to your function header (you can append more schemas to this path as necessary, or just force callers to qualify their table_name).

The other issue is that the caller may still lack permissions for the table in question. Naming conventions might be “good enough” in most cases, but it’s still a flaw. I *think* you can solve this with calls to “has_table_privilege(session_user,table_name,)” (though there may be more loopholes I’m not aware of). “session_user” in the context of a SECURITY DEFINER function still identifies the “real” user (as opposed to “current_user”, which gives you the effective role, i.e. the definer). And this privilege check will implicitly validate your table name, so you should be able to throw away your regex without fear of injection attacks.

Thanks for the tips! I hadn’t thought of shadowing something as basic as quote_ident(), or the || operator, but I guess there’s no reason you *wouldn’t* be able to, apart from needing to be devious enough to think of it. I’ll play around and update my sample functions to use explicit search paths.

As for permissions, I guess it depends on your situation and use case. With a particularly restrictive naming convention (could even be a whitelist of table names), the function could effectively act as an *extra* permission that needs to be granted – “Insert” needn’t imply “Bulk insert”, and “Select” needn’t imply “Dump to disk”. And of course, the whole point of the “superuser only” restriction is that on the filesystem side, we *can’t* inherit from any existing permissions, so all we have to go on is the file path string.

Good tip with session_user vs current_user though; I can see various ways that could come in handy.

You’re right, naming restrictions can still serve a purpose, but it’s certainly worth doing the privilege check as well, just to be certain that these functions can never be used to circumvent permissions.

By the way, there’s a nice variation which lets you lock down your search path, without requiring callers to specify a schema: declare the table_name parameter as “regclass” instead of “text”. It will be implicitly cast to/from text as needed, but it forces Postgres to resolve the table name according to the caller’s search path.

Hi, this is amazing piece, you really stressed the importance not generalising which was the first thing I in countered when searching around for uses of COPY function.

Thanks for taking the time to right this down.

How would this work for stdin and stdout, or is it going to work as stdin and stdout is client side.

Copy from stdin or to stdout doesn’t need the same security restrictions, because the data is being provided from the client, just like a standard INSERT.